Draft of Colloquium on Emergent States of Quantum Matter

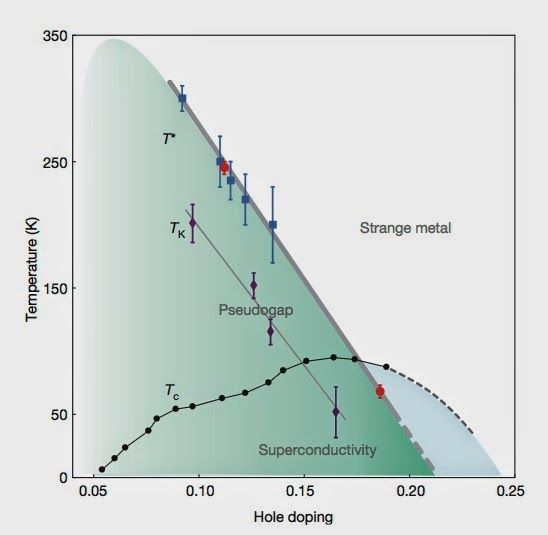

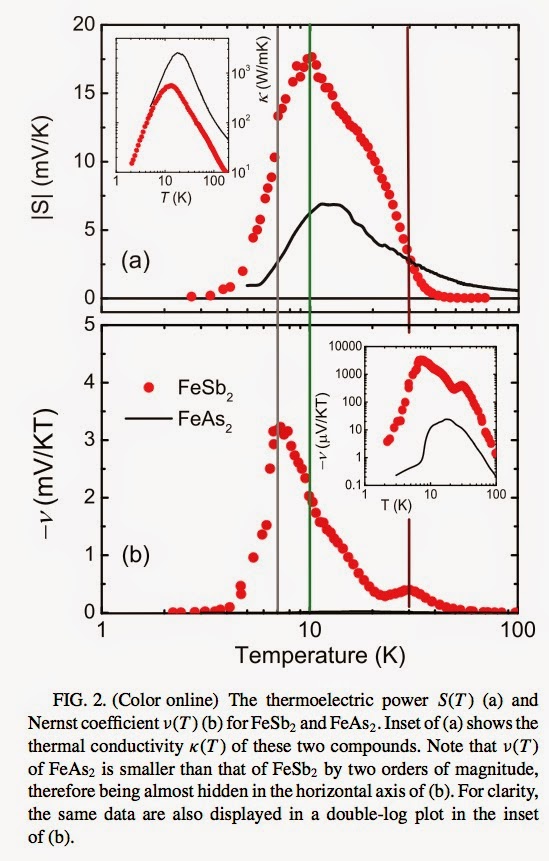

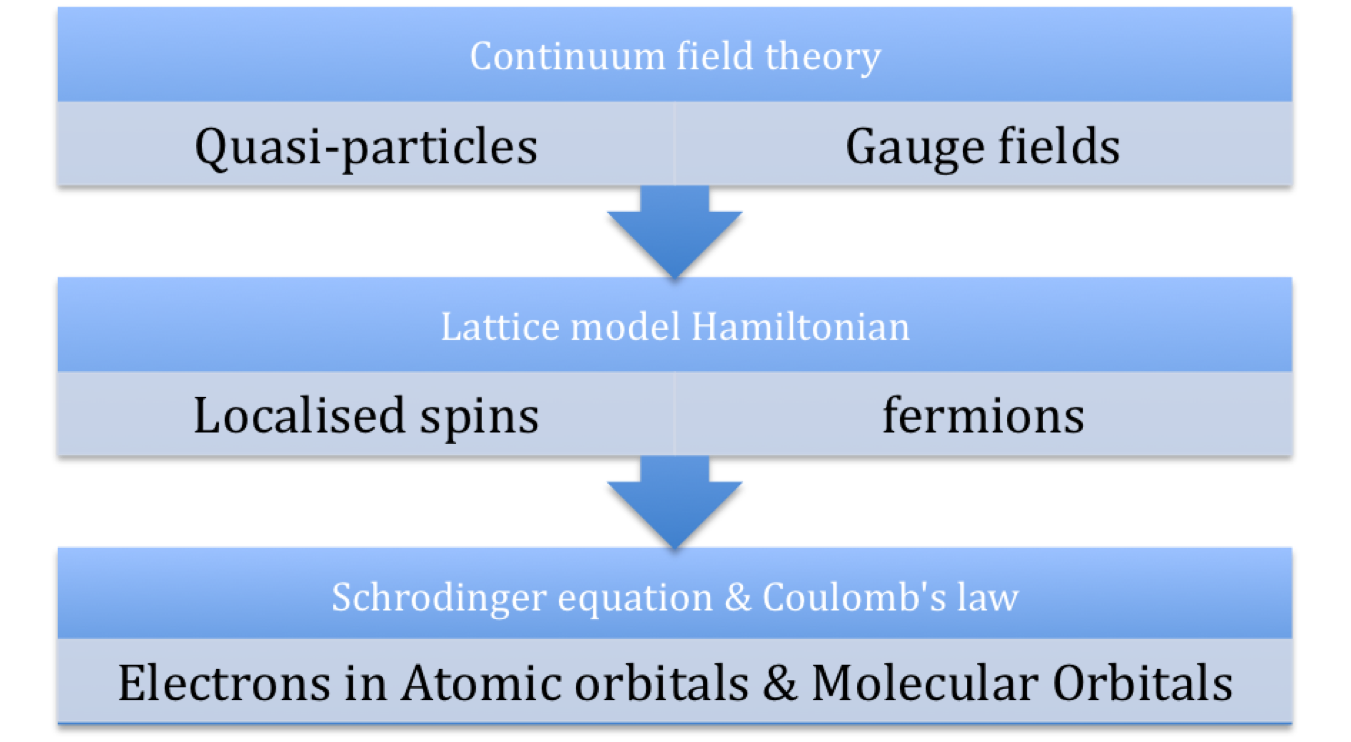

Next week I am giving the Physics Department Colloquium at UQ. I am working hard to follow David Mermin's advice and make it appropriately basic and interesting. I am tired of listening to too many colloquia that are more like specialist research seminars. I would welcome any feedback on what I have done so far. Here is the draft of the abstract.

Emergent states of quantum matter

When a system is composed of many interacting components, new properties can emerge that are qualitatively different from the properties of the individual components. Such emergent phenomena lead to a stratification of reality and of scientific disciplines. Emergence can be particularly striking and challenging to understand for quantum matter, which is composed of macroscopic numbers of particles that obey quantum statistics. Examples include superfluidity, superconductivity, and the fractional quantum Hall effect. I will introduce some of the organising principles for describing such p